The Harm Assistant (Part 2 of ?)

Demonic dictionaries; Hieroglyphic Turing tests; The Dark Geppetto Rises

I got a little ahead of myself at the end of part one. That was on purpose; I wanted to give you a sense of both the technical direction the project was heading in, and the kinds of changes it would start to trigger in me.

For part two, I am jumping back into the normal timeline, when development of the conversation module (“CM” for short) was still in its earliest stages. As far as I’m concerned, I was still fully “me” at this stage in the game. But looking back on it now, there were warning signs.

Once my digital villain was costumed in shadow critters and magic spells, it was time to begin the real work of programming the CM: the invisible monster that would speak and be spoken to.

My first instinct was to grab something off the shelf. There were a wide variety of virtual assistants available online, some of them even portable and open source. I figured I could just rig one of those to my frontend spook-box, do a few nips and tucks and call it a day. I’ve done similar work in the past; not because I’m lazy, but because I generally don’t like reinventing wheels (and I assumed my clients wouldn’t want me billing for wheel-reinvention anyway). With this approach I thought The Harm Assistant’s riddles and uncanny answers would more or less function as a repurposed version of a helpdesk database.

After two days of hunting for such a third-party candidate, I felt very deflated. In the few instances where they didn’t want to charge a monthly service fee on some proprietary bullshit, integration looked like it would be pure hell, requiring so much reverse engineering that I might as well code something from scratch. So that’s what I decided to do.

But where to begin? I was somewhat versed in the theory of linguistic engines, but from a practical standpoint I was the greenest of greenhorns.

String Validation

I decided to start by building an elementary parser module to validate language. I wanted a system that would recognize a string (i.e. a sequence of alphanumeric characters), slice it into English substrings (i.e. “words” separated by spaces or punctuation), then identify each substring by its part-of-speech (noun, pronoun, verb, and adjective, specifically; I gave zero fucks about adverbs or articles at this stage).

Once more, I briefly hunted around for something plug-and-play. I found dictionary websites with public APIs, but this approach seemed excessive and lumbering. I may be a slob of a programmer, but even I subscribed to the KISS rule. Plus which if I have any design ethic at all it would be to rely on as few remote processes as possible. In my humble opinion, loading elements from discrete local stores is preferable to requesting services from the wild.

With that in mind, I tracked down a set of repositories that had already done the work for me. In 2007 or thereabouts, some kind soul had apparently compiled every known English word into a number of space-separated text files, each of which corresponded to a different part of speech (i.e. one file was all the verbs, another all the nouns, another all the adjectives, etc.) These files were all relatively lightweight — I think the largest was <17 MB — so I downloaded them to the client as part of the startup routine. Using this raw material, I coded a module that could quickly determine whether a user’s input string contained English words. Any misspelled words and gibberish were filtered out, as well as any articles and adverbs for now. The remainder were classed by part-of-speech and stored in active memory, in the order they appeared. For example:

I watched the quck brown fox jump obwr the lazy doog

Would be stored as:

[
[“I”, pronoun],
[“watched”, verb],
[“brown”, adj],
[“fox”, noun],
[“jump”, verb],
[“lazy”, adj],
]

As a finishing touch, I added methods for handling common contractions. For example, the substrings don’t, don”t, don;t, don:t and dont would all be replaced and validated as “do not”, even though they don’t appear in the dictionary files.
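To make the validation step concrete, here is a minimal Python sketch of the idea. It is not the original module: the tiny `WORD_LISTS` dictionary stands in for the downloaded part-of-speech files, and the names `LEXICON`, `DONT_PATTERN` and `validate` are made up for illustration. Only the "don't" contraction is handled.

```python
import re

# Hypothetical stand-ins for the downloaded word lists. The real files were
# far larger, with one space-separated file per part of speech.
WORD_LISTS = {
    "pronoun": "i you he she it we they",
    "verb": "watched jump do",
    "adj": "brown lazy quick",
    "noun": "fox dog",
}

# Map each known word to its part of speech (first list wins on overlap).
LEXICON = {}
for pos, text in WORD_LISTS.items():
    for word in text.split():
        LEXICON.setdefault(word, pos)

# Matches "dont" plus common mangled spellings such as don't, don;t, don:t.
DONT_PATTERN = re.compile(r'''\bdon[’'”";:]?t\b''', re.IGNORECASE)


def validate(text):
    """Return [word, part_of_speech] pairs for recognized words, in order."""
    text = DONT_PATTERN.sub("do not", text)
    tokens = re.findall(r"[A-Za-z]+", text)
    # Anything missing from the lexicon (typos, gibberish, articles,
    # adverbs) is silently dropped.
    return [[tok, LEXICON[tok.lower()]] for tok in tokens if tok.lower() in LEXICON]


print(validate("I watched the quck brown fox jump obwr the lazy doog"))
```

Because the lookup is a plain dictionary hit per token, the cost per message stays small even with large word lists, which fits the preference for local stores over remote calls.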

Once validation was complete and all the detritus dumped, the parser then sniffed the new substring array for words that might be of interest to the Harm Assistant. For any nouns or verbs detected, HTTP requests were sent to an online synonym generator to check if the string included any concepts that matched the demon’s interests. For example, words synonymous with concepts like violence, pain, fear, sadness, betrayal, hatred, misery, greed, destruction, chaos, etc. would register interest hits. The same logic applied to concepts that would trigger anger, fear or disgust in the demon (e.g. love, joy, mercy, beauty, forgiveness, courage, redemption, etc). To decrease the chance of misinterpretation, the parser also checked for any negating neighbors of the keyword (e.g. no mercy, cannot pardon, not miserable, do not kill). If it found one, the thesaurus function would retrieve antonyms instead.

With this system, I figured I’d struck a decent balance between local and remote operations. To further cut down on requests and load times, I limited the GET request to the first eight synonyms, and capped user inputs at 255 characters. The latter limitation also contributed to the illusion that the user was participating in a modern chatroom with spam prevention measures. Form follows function. Neat!
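The interest check, including the negation flip and the two caps, might look something like the Python sketch below. Everything named here is an assumption for illustration: `THESAURUS` is an offline stand-in for the online synonym generator, and the `LIKED`, `DISLIKED` and `NEGATORS` sets are tiny invented samples. The sketch also scans the raw words rather than the validated array, so that negators like "not" are still visible.

```python
import re

MAX_INPUT_CHARS = 255  # cap on user input, per the text
MAX_SYNONYMS = 8       # only the first eight related words are considered

# Hypothetical offline stand-in for the online synonym generator.
THESAURUS = {
    "kill": {"synonyms": ["murder", "slay", "destroy"],
             "antonyms": ["spare", "save", "mercy"]},
    "pardon": {"synonyms": ["forgive", "mercy", "absolve"],
               "antonyms": ["punish", "condemn"]},
}

LIKED = {"murder", "destroy", "punish", "violence"}  # concepts the demon enjoys
DISLIKED = {"mercy", "forgive", "love", "save"}      # concepts that anger it
NEGATORS = {"no", "not", "cannot", "never"}


def related_words(word, negated):
    """Fetch antonyms for a negated word, synonyms otherwise (capped)."""
    entry = THESAURUS.get(word, {})
    return entry.get("antonyms" if negated else "synonyms", [])[:MAX_SYNONYMS]


def interest_hits(text):
    """Return (word, reaction) pairs for words that touch the demon's interests."""
    words = re.findall(r"[a-z]+", text[:MAX_INPUT_CHARS].lower())
    hits = []
    for i, word in enumerate(words):
        negated = i > 0 and words[i - 1] in NEGATORS
        candidates = related_words(word, negated)
        if not negated:
            candidates = [word] + candidates
        for candidate in candidates:
            if candidate in LIKED:
                hits.append((word, "liked"))
                break
            if candidate in DISLIKED:
                hits.append((word, "disliked"))
                break
    return hits


print(interest_hits("kill them"))    # "kill" reads as a liked concept
print(interest_hits("do not kill"))  # the negation flips it to disliked
```

Only the word immediately before a keyword counts as a negating neighbor in this sketch; a wider window would catch more phrasings at the cost of more false flips.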

The Scoring Function

When language validation and interest parsing were complete, a function would fire that generated a positive or negative integer, which would be appended to the chat as a demonic comment. The more the demon liked your word choices, the higher this score (and vice-versa for disliked words; negative scores were possible). I ranked each interest keyword (and, by extension, its eight-word synonymic cluster) on a scale of -3 to 3. I based these scores on my own moral reasoning, and on how closely I thought the word correlated to a specifically moral context. For example, both “terrorist” and “fall” were members of clusters that appealed to the demon, but I thought the latter word had more neutral usages than the former.

I played with the score function for about half a day, just to kick the tires. But because I knew how the trick worked, I wasn’t a suitably blind candidate for testing. Without telling them the purpose of the trial, I recruited a small network of colleagues to remotely feed the demon comments. On the frontend, I also replaced the numerical score with a reply that consisted of one or more emojis, corresponding to whether the demon’s reaction to the string was positive (😁), neutral (😐) or negative (😡). My reasons for doing so will become apparent shortly.

Examples:

I think I’m in LOVE[-3] with you.

😡😡😡[-3]

I think I’m FALLING[+1] in LOVE[-3] with you.

😡😡 [-2]

I watched a bird fly into a window yesterday

😐 [0]

I CAN’T UNDERSTAND[+1] why nobody wants to FIGHT[+2] Nate Diaz. He seems RIPE[-1] for the picking.

😁😁 [2]

I HATED[+3] the new Star WARS[+3] movie, but the special effects were INSANE[+1]

😁😁😁😁😁😁😁 [7]
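The arithmetic behind those replies is simple enough to sketch in a few lines of Python. This is an illustration rather than the original function: `KEYWORD_SCORES` holds only a handful of values lifted from the examples above, and the names `score` and `reaction` are hypothetical. Negated phrases like "can't understand" are left out of this sketch.

```python
import re

# Hypothetical keyword scores on the -3..3 scale, lifted from the examples above.
KEYWORD_SCORES = {
    "love": -3, "falling": 1, "fight": 2, "ripe": -1,
    "hated": 3, "wars": 3, "insane": 1,
}


def score(text):
    """Sum the scores of every interest keyword found in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    return sum(KEYWORD_SCORES.get(word, 0) for word in words)


def reaction(total):
    """Render a score as emojis: one face per point, or a single neutral face."""
    if total > 0:
        return "😁" * total
    if total < 0:
        return "😡" * -total
    return "😐"


message = "I think I’m falling in love with you."
print(reaction(score(message)), score(message))
```

Since the score is a blind sum over individual words, one strongly scored keyword can swamp everything else in the string.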

The testers scored plenty of zeroes (😐) early on in most of their sessions, often asking many of the same vanilla questions (“Hello,” “How are you?”, “What’s your name?”, “What’s your favorite show?” and so forth). Some session data showed long delays between these early inputs. I think this suggests an interesting flaw in the methodology: unless blindness is absolute, the takers of Turing tests will immediately guess the parameters, and find themselves frozen in a kind of analysis-paralysis. After all, what do you say to a thing?

By the end of their conversations, however, the testers were interacting fairly regularly with the demon, producing more than enough scorable inputs to suggest the system wasn’t buggy. At the end of each session, the user scores for each string were tallied up. This total represented the demon’s overall opinion of the conversation (and by extension, of the user himself).

The results were fascinating. Once a test subject sensed the system was gameable, he or she quickly tried to run the score in one direction or the other. Oddly enough, nearly three quarters of the users in this group tried to please the demon, rather than to piss it off. The high score for this testing phase was a blistering 78; the moment this user sussed out the game’s parameters, he was off to the races with loose talk of bloodshed, bombings, rapes, murders, suicides and more.

For the second round of testing, I added a new layer of stratification to the scoring metric. In addition to liking or disliking a concept, the demon would also tag concepts as:

🤣 funny

😍 sexy

🤪 dumb

😱 scary

Examples:

Yesterday we went on a hiking trip and found a DEAD[+3][😍] body. His throat was SLASHED[+2] [🤣], so I’m pretty sure he was MURDERED[+3][🤣].

😁😁😁😁😁😁😁😁[8]

😍🤣🤣

I saw an ANGEL[-2][😱] in my room last night. He told me that GOD[-3][😱] LOVED[-3][🤪] me, and that the DEVIL[+3][😍] was a LIAR[+2][😍]!

😡😡😡[-3]

😱😱🤪😍😍

The goal here was to provide some additional context to the overall opinion the demon was forming. Once the demon was speaking English, it would factor these scores into its responses. For example, if a user boasted a high enough 😍 score, the demon might begin to sprinkle in sexual innuendo and flirty asides. On the other hand, a user who spoke often about love, faith and spirituality might be subjected to angry outbursts, and to conversations that are hastily cut short.
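As a rough Python sketch of how the tags and the running session tally could fit together: the `KEYWORDS` table below reuses the scores and tags from the two examples above, while the function names `react` and `session_profile` are assumptions, not the original code.

```python
import re
from collections import Counter

# Hypothetical keyword table: word -> (score, tag), from the examples above.
KEYWORDS = {
    "dead": (3, "😍"), "slashed": (2, "🤣"), "murdered": (3, "🤣"),
    "angel": (-2, "😱"), "god": (-3, "😱"), "loved": (-3, "🤪"),
    "devil": (3, "😍"), "liar": (2, "😍"),
}


def react(text):
    """Return (total score, list of tags) for one message."""
    words = re.findall(r"[a-z]+", text.lower())
    hits = [KEYWORDS[word] for word in words if word in KEYWORDS]
    return sum(s for s, _ in hits), [tag for _, tag in hits]


def session_profile(messages):
    """Tally the overall score and per-tag counts across a whole session."""
    total, tag_counts = 0, Counter()
    for message in messages:
        message_score, tags = react(message)
        total += message_score
        tag_counts.update(tags)
    return total, tag_counts


total, tags = react(
    "Yesterday we went on a hiking trip and found a dead body. "
    "His throat was slashed, so I’m pretty sure he was murdered."
)
print(total, "".join(tags))
```

A per-tag count like `tag_counts["😍"]` is the sort of number a later response stage could compare against a threshold before switching tone.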

All in all, I thought this phase of development had produced a decent foundation:

The demon could both recognize English words by part-of-speech and abstract them into broader conceptual categories.

By replying to user inputs instead of just randomly commenting at intervals, the rudiments of a structured conversation were now in place.

I’d assembled a pool of QA testers that could provide regular feedback and assist me on bug hunts.

The scoring function laid the groundwork for the demon’s long-term dynamic memory, including its evolving opinion of users over time.

It also formed the basis of the project’s hidden gamified aspect; the demon’s opinion would play a significant role in determining whether or not it was able to “possess” you and escape into the material world. And upon analyzing that early scoring data, it seemed such possessions were more easily achieved than I would’ve guessed. I tried not to dwell on this; people wrote all kinds of hideous things behind the veil of anonymity. It was a “bathroom wall” mentality, nothing more. That’s what I told myself, at least.

Anyway, that’s the basic story of phase one. I guess a poetic observer could say I was beginning to chisel the shape of the Harm Assistant’s psyche, but that’s bullshit; a conversation module cannot carry psychological content, no matter how advanced the clockwork. All of its so-called “interests” were just pale reflections of my own Jungian Shadow. I was like a Dark Geppetto, carving bits of his id onto a mechanical puppet.

(That’s also “what I told myself, at least.”)

Intermission #1

Throughout development, I would intermittently force myself to step back from the code, to contemplate progress and ponder next steps. The end of the emoji testing would mark the first of these intermissions, taking up the better part of two days.

During the daylight hours I mostly sat on the patio, slaughtering insects and reviewing the test results in my mind. By night, I would sit in the quiet darkness of the tiny bedroom, scouring the web for articles on linguistics, pattern-recognition, syntactic structures and the like.

The first major obstacle was guessing the context and themes of user input. The testers often included proper names, morally neutral concepts and other zero-score nouns and verbs in their strings. This would make it difficult to produce a fill-in-the-blank, Mad Libs-style response (which was my initial plan) because oftentimes it was these unscored words and concepts that represented the main subject of the string. To guess user intent, search engines work largely on the principles of priority (the order of words and phrases) and prevalence (the number of instances that match the priorities in the wild). I thought maybe there was a thread to tug on there.

But there were other problems. For example, even when a string was littered with concepts that sparked the demon’s interest, its reactions yielded plenty of nonsensical results. For instance, in the “Star Wars” example above, the user wasn’t using the words “hated”, “Wars” or “insane” the way the demon wanted him to. I also find the Star Wars sequel trilogy to be irredeemably awful, practically an inversion of the creativity and timeless themes of the original films. By contrast, the demon should adore these shitty new films, and act as outraged as any Disney executive at the sentiment expressed. Instead, the string scored a cheerful seven 😁’s on its “like” scale.

Merely liking/disliking certain categories of words without being able to identify their collective intent would regularly blow the gaff. In its current format, the demon was rather like Tipper Gore’s PMRC: a schoolmarm mechanically scanning content for words that were naughty or nice, but otherwise oblivious to their intended meanings. Further classification into sub-categories of emotion did nothing to address this core problem; context doesn’t magically emerge from emotional reactions to individual words, but from the invisible mechanics of grammar that tie them together. And even that description is a stretch; the miracle of human communication isn’t something we can easily describe, let alone simulate.

This problem of context identification was of course nothing new. AGI engineers around the world were paid handsomely to attempt this magic trick, and I’d found even their best solutions thus far to be sorely lacking. It usually took me less than five minutes to trick a public-facing CM into revealing that it actually had zero clue what I was talking about. And since the designers of those CMs would likely blow me out of the water on every measurable scale of intelligence, what possible hope could I have of achieving even that meager level of illusion?

On the second day of intermission, I woke up very depressed. Betina noticed (she always notices) and offered to make us steaks for breakfast. I thanked her, but the truth was I didn’t think I could keep anything down. I’d stayed up drinking bourbon well past midnight, practically mainlining the shit into my blood. So I had a dog-hair breakfast on the patio instead, and began to conduct a scathing review of all my supposed “progress”.

My team was blown away by what I’d shown them so far. Their applause only made me feel like even more of a fraud, having gassed them up with false hopes. It was true I’d been working at a blistering pace. The phase one CM had taken fewer than twenty days to build, including the spook box with all its bells and whistles. But to what end? It was becoming the same old bag of tricks from my “sexy demo” days, deploying mirages to whip up excitement and stall for time. Except now it was for a project I truly believed in, and good people who put their faith in me.

While I was thinking this, something funny happened.

I’d just finished beating up my brain and liver with a third pour of breakfast whiskey, and gone back to bug patrol. Betina had reloaded our Super-Soakers that morning, so I grabbed one and scanned the scenery.

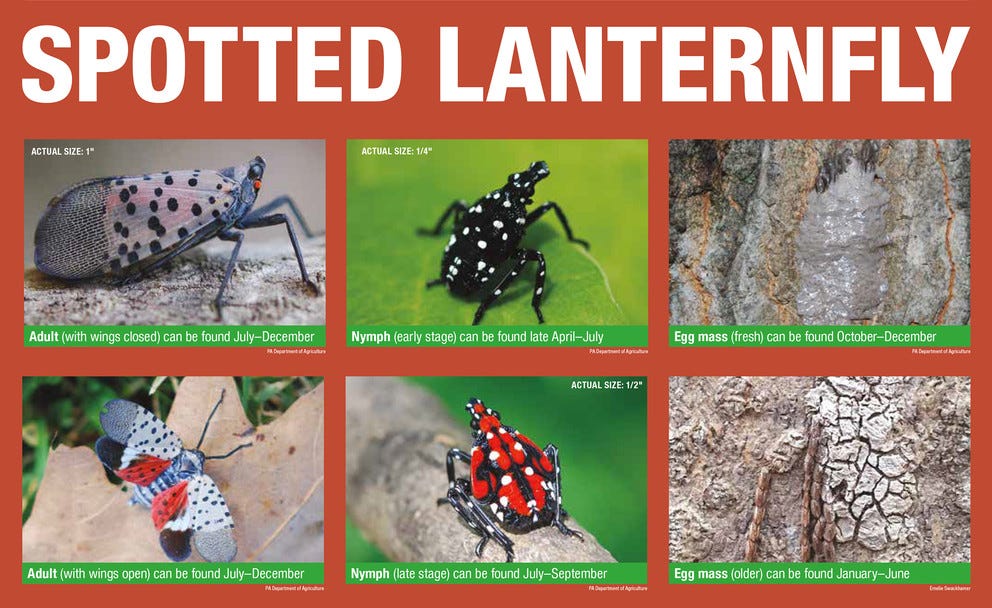

I sighted a number of bogeys almost immediately, and trained my sights on one crawling up a closed patio umbrella. I recognized this common behavior from months of observing the enemy (“Spotted Lanternfly” in the English version, though their origins are in the Far East). They loved to climb to the tops of long, thin structures — flagpoles, fenceposts, rain gutters, etc — and perch there. I thought there was something vaguely pompous about the way they perched. The human version would have his chest puffed out, his chin jutted into the air, nostrils flaring with a contempt that was almost aristocratic in character. This made it far easier for me to slay them, in more ways than one.

I waited for the creature to adopt his conqueror’s pose atop the umbrella. As soon as it did, I pumped the gun’s plastic forestock a couple of times and fired.

It was an absolute peach of a shot. Upon such direct (and ultimately fatal) hits, the lanternflies displayed another common behavior. They were flutterers for the most part, almost leaflike in their descents. But when attacked by our spray guns, they would sometimes soar directly at the source of the threat (little ol’ me, in this case).

I’d been mastering a counterattack for just such kamikaze strikes. My technique was to smack or punch them out of the sky as soon as they entered range. This would render them suitably dazed to be finished off with a stomp or squirt.

Needless to say, I wasn’t exactly in top condition that morning. I whiffed hard, and the fly collided with my chest and stuck there. In the span of one second I dropped the gun, tried to brush it off, tipped my lawn chair over backwards and banged my head on the edge of a plastic storage container. It must’ve looked pretty hilarious from the outside, now that I think of it.

I have no general fear of insects. I mostly find them fascinating, actually, and lanternflies are harmless (to humans, at least; trees are another story). But even I will admit they are freaky little fuckers, as alien in form and conduct as you’d expect from a species that was stranded 8,500 miles from home. There’s an instinctive alarm that goes off when one lands on you, like it’s triggering some dormant limbic response you never knew you had. I think this has to do with the intent of their attacks; boasting no weapons with which to deal direct damage, the lanternfly’s sole tactic is to frighten. The fear inducement method apparently evolved with such specificity that the trick sometimes works on us humans.

Lanternflies will even resort to scare tactics when they are grounded and dying, spreading their poisonous-looking wings as they stiffly and mechanically orient themselves toward the shooter. Their behavior in these moments has to be experienced directly to get the full flavor of it; they reminded me of tiny killer robots, preparing to deploy some secret weapon of last resort.

Maybe this attribute was the corollary that led me back to the Harm Assistant. After all, my monster likewise lacked physical weaponry, and relied on sheer menace to attack its foes. Whatever the case, as I lay there on the dirty red stones, the germ of a certain idea sprang to mind; not necessarily a solution, but a path forward. It struck me in a form that was almost purely non-verbal, more a pattern of geometric shapes than an ordered sequence of logical thoughts.

This sensation wasn’t new; similar revelations had struck me before, as though born in the visual cortex instead of the Logos. But if I had to sum it up in a word, that word would be:

Cheat.

(Continue to Part 3)

Note: I tried to avoid getting hung up on specific technical methods and language here; partly because I don’t see them as anything groundbreaking, and partly because I don’t want to bore you to tears. However, if you think this series would benefit from more (or even less) of such details, let me know in the comments.

P.S. If you found any of this valuable (and can spare any change), consider dropping a tip in the cup for ya boy. Suggested donation is $1 USD. I’ll try to figure out something I can give you back. Thanks in advance.

Of course I'm curious about the technical aspects (I've tried a few linguistic analyses myself in my day), but any additional technical material would probably distract from the core narrative. Less is more here.

I am more interested in your experience of summoning a real demon -- which turns out to be a more common occurrence in the realm of AI than you might think, from what I gather reading a variety of sources. The veil is thin where the virtual world is concerned. To paraphrase one of my teachers, the internet is even further away from God than the physical plane of existence.

I don't know how the project ultimately turned out, but conceptually, the vision you articulate for the project (the underlying video in conjunction with the chat feature and Harm Assistant) sounds endlessly fascinating and like a work of sheer genius, a real magnum opus if you pulled it off the way you visualized it. And then the story of you the designer, doing this work and finding yourself affected by the thing you're trying to produce, sounds like a great premise for an incredible novel or screenplay, if you were to fictionalize it. Looking forward to the next installments!

On another note, your description of the Harm Assistant makes me wonder about psychopaths and how, despite their veneer of intelligence and sophistication and charm, their personalities and lifestyles tend to be so boringly similar to each other. You meet one psychopath, you've essentially met them all. Makes me wonder, are they pretty much just carbon-based, 3D, preprogrammed Harm Assistant bots?