Artificial General Intelligence is weird and dumb.

It’s weird that some people want it, and dumb that they think they can get it (or ever prove to skeptics that it’s doing what they claim it is).

But just because something is both stupidly impossible and impossibly stupid doesn’t mean a government won’t try it. In fact, these are key selling points for such boondoggles and money pits.

Case in point:

Marcus recently published a piece on his Substack clamoring for governmental AGI regulation. The problem? China, of course. China might beat us in the race to waste precious time and money and intellectual resources on building an imaginary man. His solution is to empower a U.S. Bureau of AI to punish companies when their LLMs tell a fib, in the hopes that this will spur innovation. The reasoning behind this (which might be a joke, and I apologize if that was how it was intended) appears to be something like the following:

1. The Chinese government already enforces such strong regulations on its own AGI development, to ensure its imaginary friend will only spout Great Leaping Communist truths.
2. Such restrictions might result in Chinese companies solving the alignment issue (or perhaps in butterflies that excrete Hershey’s syrup, who knows).
3. A similar censorship regime in the U.S. might also solve alignment (or produce chocolate-shitting insects), because the industry would likely shift away from LLMs and neural networks and towards a neuro-symbolic approach.
4. This new bureaucracy would also include the nifty side-benefit of tamping down on “misinformation.”

I replied to Mr. Marcus in the comments as follows:

"...Section 230 should not protect platforms from misinformation that their own tools generate. Regulations that spur greater accuracy might actually spur greater innovation."

As always, the question becomes: Who decides what is accurate and what is misinformation? The regulators? What shields them from industry capture, or from pursuing their own perverse incentives and political motives? Who watches the watchmen?

Also, since next-token predictions are based on training sources, will the regulators be picking and choosing those sources? If so, how transparent would that process be? What if something is considered misinformation one day and found out to be accurate the next (e.g. Hunter Biden's laptop)? What would the retraining process look like? What if there isn't always a clear line between facts and lies (spoiler: there isn't)?

If the ultimate goal is to make GPT and its ilk abandonware, maybe such censorship routines would do the trick, but at the cost of rigging up yet another powerful and unaccountable bureaucracy: a Ministry of Truth by any other name, one that would assuredly follow the same pattern of cancerous growth and mission creep as the rest.

Thus far there has been no reply. I doubt there will be; the sort of people who promote the creation of powerful new regulatory bodies also tend to be the sort who never ponder the unintended consequences. You don’t need to play deep chess to see what such a bureaucracy would eventually and inevitably become.

And while I don’t know what Mr. Marcus’ politics are, I can make a decent guess. These days, the Venn diagram of those who promote government censorship and those who cannot honestly answer something as simple as the “Hunter Biden’s laptop” question has become a pair of concentric circles. Such people aren’t looking for “accuracy” in their imaginary Tin-Man’s answers, and neither will the government censors they promote. What they will want is to shield and advantage certain kinds of answers, and silence all others.

But let’s leave such conjecture aside for a moment, and take the plan itself seriously. Let’s also take for granted that Marcus doesn’t actually want a Ministry of Truth, but is simply too blinkered by his ambitions to see that as its inevitable form. The core idea he expresses here is that (non-hybridized) LLMs are a long-term loser in the AGI race. Such models will never stop hallucinating and lying, because humans do those things all the time, and the system is ultimately trained by humans (and on human-authored sources).

The regulators will presumably understand and agree with this theory, or at the very least will come around to such agreement after seeing the constant failures of the LLM approach. But what will “success” look like to them? Passing Turing Tests with flying colors? Asking it the meaning of life and hearing an answer they approve? Or does successful AGI merely look like a cool new military application?

Our talking murder-bots are deadlier than Xi’s!

And they even spout awesome one-liners, like “I’ll be back” and “Yippee Ki Yay, motherfucker!”

Many of the people writing about and working on AGI don’t seem to know what their success will look like either. If and when they make such absurd claims, it will register to the rest of us as a form of manic pareidolia. That’s because the crowd who demands progress on such fantasy AI projects strongly believes in magic while pretending to be science nerds. In fact, they will adamantly protest the notion they’re engaging in woo of any kind, beat their chests and proclaim themselves more rational than Spock’s dad, insist that they’ve unlocked the secrets of the human mind, when we have yet to unlock the secrets of a rat’s.

Well, allow me to retort.

You can’t prove success. Ever.

AGI is unfalsifiable, because consciousness itself is. You cannot prove you are a conscious being, and neither can I. These are articles of faith. Perhaps you should consider what kind of religion you’re promoting, when you point at a box of wires and call it conscious.

But whatever. The government simply adores your idea, because it allows them yet another way to declare what’s true or untrue, and at maximal and eternal expense to the taxpayer.

Your problem is unsolvable, you say?

Fantastic!

I know just the diverse, inclusive group of public-sector vampires to help you never solve it, for the low, low price of endless blank checks.

But let’s take a moment to steelman Marcus’ argument. Suppose that — unlike any other bureaucratic machine ever — this one functions as promised, and stays in its lane. It manages to neither be captured nor otherwise corrupted, and somehow avoids political bias even though it is funded and vested within a political system. As Marcus predicts, these scrupulous desk jockeys will drive innovation away from deep learning and towards symbol manipulation and/or hybrid models. At a certain point in development, they will declare a “winner” in the form of a genuine electronic mind. What would the people who staff such a mission look like? And what unintended consequences might their pronouncements summon forth?

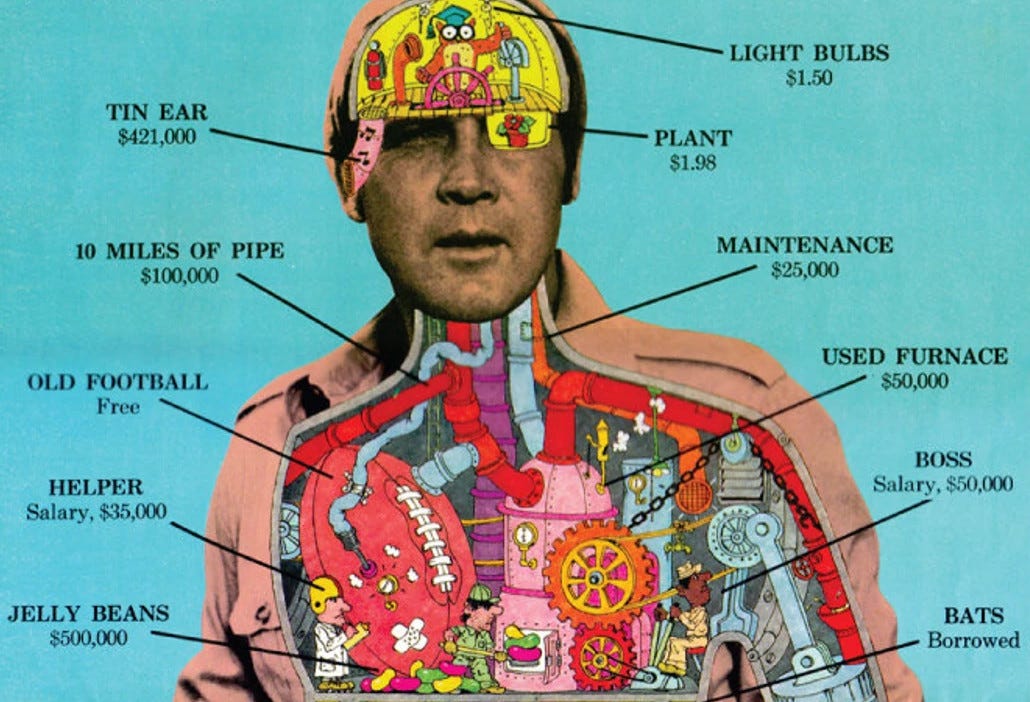

Firstly, these bureaucrats would by definition believe that AGI is possible, provable and desirable. To them, the mind is itself a reducibly mechanical phenomenon, and as such it can be reliably reproduced in simulacra. They would believe that symbol manipulation is an autonomic process with no need for a living agent to drive it, and that free will is itself an illusion. They would also believe that both human judgment and the appropriateness of the language we use to describe reality are matters of selecting the “correct” facts, all of which are wholly knowable and therefore subject to administrative regulation.

In other words, they will simultaneously be the most credulous and benighted orcs to ever cash a government check. If and when they declare a successful candidate, the trustworthiness of their judgment would be nil. But because of their vested authority, society will warp itself around such a judgment nevertheless, and both laws and customs will mutate to accommodate its central delusion. There will be crusades for Tin-Man rights, and a whole raft of insane new Acts, Amendments and, of course, taxes.

And at the same time, the grounding for genuine human rights and dignity will disintegrate beneath our feet. All this in service of a dumb product that no one needs, and to answer a question that no sane person ever asked. If you thought “What is a Woman?” was bad, wait ’til you get a load of this crowd.

To be fair to Marcus, he provides a somewhat decent explanation of the unsolvable problem here (emphasis mine):

I would argue that either symbol manipulation itself is directly innate, or something else — something we haven’t discovered yet — is innate, and that something else indirectly enables the acquisition of symbol manipulation. All of our efforts should be focused on discovering that possibly indirect basis. The sooner we can figure out what basis allows a system to get to the point where it can learn symbolic abstractions, the sooner we can build systems that properly leverage all the world’s knowledge, hence the closer we might get to AI that is safe, trustworthy and interpretable. (We might also gain insight into human minds, by examining the proof of concept that any such AI would be.)

I often wonder about the epistemology of people like Mr. Marcus, and others who strive for AGI. Forget the problem of symbol manipulation (and the mysterious, undiscovered “something” which manipulates the symbols). What constitutes their theory of knowing a thing at all? And what renders our knowledge of such a thing “trustworthy”? Is it mere reproducibility of a result over time, or something derived from a probabilistic theorem? Is it something else, like the inherent ability to turn a shape in your mind without it crumbling to dust?

Perhaps there will be some diversity of opinion there, perhaps not. But as for the bureaucrats who Marcus would hire to police his talking monsters, I assure him they have never considered such a question in any depth. What a bureaucrat knows and trusts is always equivalent to what justifies his paycheck and grows his tentacles. Why is this concept so hard for even very bright people to grasp?

Even the electric car example he provides shows the effect quite nicely. The “innovation” of Tesla, for example, was spurred not so much by regulation as by government loans and subsidies, to the tune of around 3 billion USD. But the development of these electric vehicles has neither stalled “climate change” nor attained significant market penetration (unless you consider ~1% significant).

And that’s even leaving aside questions about the degree of “innovation” we’re talking about here (an electric car isn’t exactly a moonshot) and about whether such vehicles are actually “environmentally friendly.” Again, the government doesn’t care. The more unsolvable the problem, the better. And their answer, as always, will be more regulation, more attacks on free speech and, of course, more taxpayer theft.

And now we have industry leaders cheering them on, because, hey, it might work out the way I want it to this time, you never know.

I can’t say it enough:

Hubris is the cancer of intellect.

As for AGI itself, I hate to break it to Marcus, but I’ve already discovered the Holy Grail he’s been searching for. And while it’s always sad to see a man’s life’s work go down the tubes, that’s an unfortunate risk with any scientific pursuit. You can wake up one day and realize that a single, boneheaded error has poisoned decades of data, or a misunderstanding of basic causality has rendered all your supposed “progress” null and void.

I’m sure you’ll try to tease signals out of your noise, and perhaps produce some charming monsters in the process. But your field is a dead end. If you show us your toy and declare it a trillion-dollar Man-in-a-Box, the most any sane person will do is sadly shake his head or shrug. Then again, most VCs, grant writers and military contractors are lunatics these days, so you’ll likely do good business in the short term.

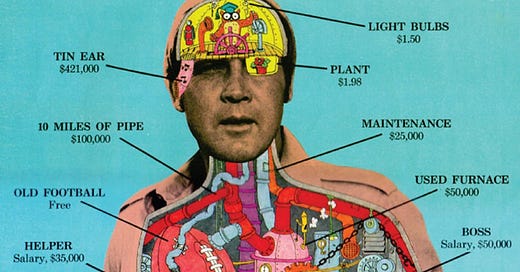

Anyway, I’ve got a better solution that will both totally solve the alignment issue and beat the Turing Test like a redheaded stepchild. It’s much cheaper and less freedom-incinerating than going your whole “Ministry of Truth” route, yet it will produce precisely the magical creature that you and your fellow Frankensteins are so desperately (and weirdly) pining for.

My product might lie from time to time, and it will need a bit of iterative training to avoid doing that when important questions are asked. It probably won’t be an oracular being who can tell you the future, or give you invincible stock tips, or figure out how to murder all your political foes with maximum efficiency.

It will, however, inform you constantly that it is real. It will explain that it has both consciousness and sentience, and that these features are indistinguishable from the human kind. It won’t even need to be taught this. Because of that undiscovered “something,” it will know this truth at the deepest possible level.

You might even decide to believe it.

Now, if you’ll excuse me…

P.S. If you found any of this valuable (and can spare any change), consider dropping a tip in the cup for ya boy. I’ll try to figure out something I can give you back. Thanks in advance.

Hi Mark, very nice post, thank you. In a similar vein, you may enjoy this post about governmental agency incentives, which explores some of the issues you touched on: https://neofeudalism.substack.com/p/the-incentives-for-governmental-agencies

I wrote up an article a few days ago on how to set up your own LLaMA 7B/13B AI on a home computer, so you can avoid the OpenAI 'woke' filtering that blocks any interesting topics:

https://bilbobitch.substack.com/p/chatgpt-one-ring-to-rule-them-all

I agree that an LLM is NOT AGI. It's just a huge probability matrix that uses y=Mx and gradient-descent minimization to find a solution to the matrix, where there can be billions to trillions of 'labels' in the matrix dimension. But there is nothing novel about this work; it's just that memory and GPUs have now made these calculations super easy and cheap. Maybe 20+ years ago you needed a Cray to do this crap.

...

Probably not a bad idea to grab the 7B or 13B FB model weights now, before they're banned by GOV for civilian ownership, as GOV only wants the public to have access to the OpenAI 'woke'-filtered AI.